Dispatch Queues on Modern Swift Concurrency

As part of the Acknowledgement update to Reiterate, I also took advantage of the rewrite to move the app’s core audio code to the new Swift async/await concurrency model.

I say “new” even though async/await has been out for Swift for over a year now. But Reiterate has been out longer than that, and there are lots of places in the code that need to be brought up to date with the latest development features.

Moving to async/await can be a challenge. If you’re used to writing methods with callbacks or promises or futures or whatever, the async/await model can take a while to wrap your head around. But it’s well worth it! I think the programming community in general has been trying to work out the best way to handle concurrency, and it seems like async/await is now winning the battle. In general, it seems to produce cleaner code that’s easier to read and understand.

Apple has done a pretty good job of providing bridging structures to help developers move their code from the old model to the new.

In particular, there is the withCheckedContinuation(function:_:) family of functions, which can convert a callback-based API to one that uses async/await. That’s nice if you have a relatively simple API that’s based solely on callbacks, but Reiterate is a large code base with some rather complex threading going on. If you’re new to the async concurrency model like I am, and you’re facing a large project to convert, the multitude of options can be daunting. Do you use continuations? Do you try to mix in some of the older threading API with the new? What about actors?
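For reference, here is a minimal sketch of what wrapping a callback in a continuation looks like. The loadClipDuration function is made up for illustration and is not part of Reiterate; only withCheckedContinuation itself is the real API.

```swift
import Foundation

// Hypothetical callback-based API, standing in for an older audio call.
// The "duration" it reports is just the length of the name, as a placeholder.
func loadClipDuration(named name: String,
                      completion: @escaping @Sendable (Double) -> Void) {
    DispatchQueue.global().async {
        completion(Double(name.count))
    }
}

// async wrapper: suspends the caller until the callback fires.
// The continuation must be resumed exactly once.
func clipDuration(named name: String) async -> Double {
    await withCheckedContinuation { continuation in
        loadClipDuration(named: name) { duration in
            continuation.resume(returning: duration)
        }
    }
}
```

Callers can then write `let duration = await clipDuration(named: "chime")` with no completion handler in sight. This works well for a single one-shot callback, which is exactly why it was not enough for the queueing code described below.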

I went through several different iterations before I settled on one that seems to work for Reiterate. I’m fairly pleased with it now, and I think this is the “right” way to go about it, but getting to this point involved going down some dead ends and backtracking.

The Reiterate Juke Box

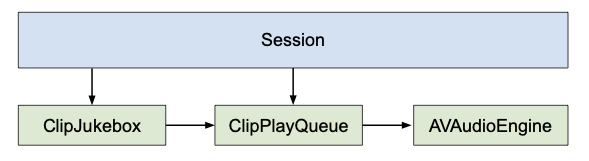

When you start a session in Reiterate, all of the clips in that session are sent to a ClipJukebox class which is responsible for playing them at the appropriate times. The ClipJukebox handles things like randomly shuffling the clips, filtering clips if they are set to play only during certain times, and so on.

Because there’s no limitation on the number of clips in a session, or what times they can play (besides what the user sets), it’s possible and even likely that two clips will be assigned to play at the same time. So when ClipJukebox selects a clip to play, it gets sent to another class, ClipPlayQueue, which queues the clip for play. If two clips happen to be selected at the same time, they will instead play one after the other, which is much nicer than having them play on top of each other.

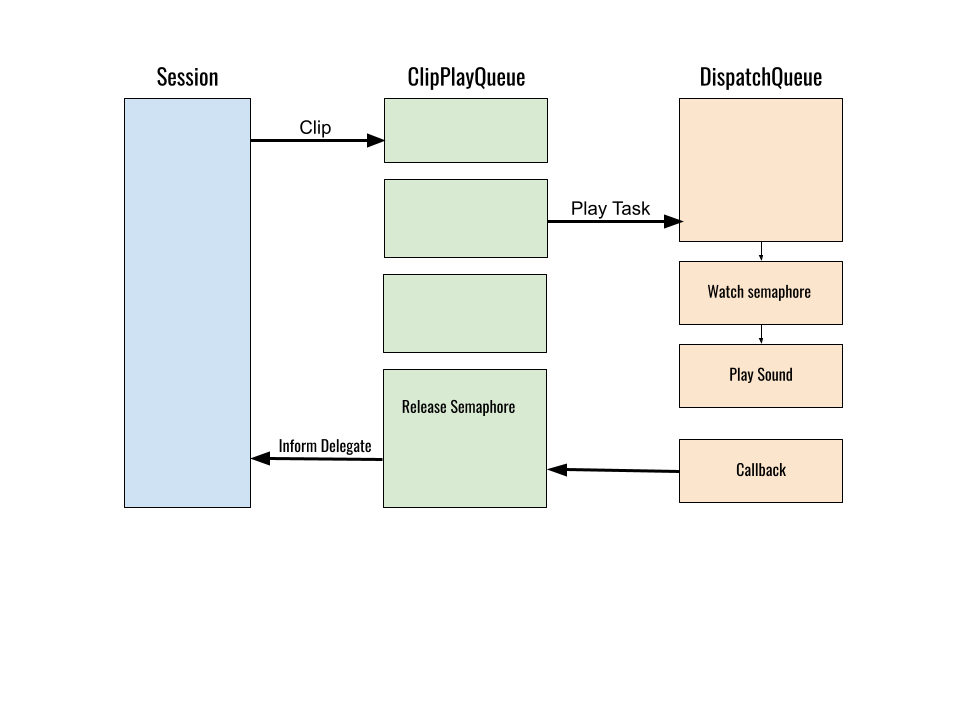

Once a clip is passed to ClipPlayQueue, it gets turned into a task that’s placed on a DispatchQueue. That task grabs a semaphore (to ensure only one task is playing at any one time), sets up a callback to trigger when its sound finishes, and plays the sound. The callback function looks up what sound was played, informs the delegate, and releases the semaphore.
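Roughly, the old design had the following shape. This is a reconstruction for illustration, not the actual Reiterate code: LegacyPlayQueue, startSound, and the didFinish closure (standing in for the delegate) are all assumed names.

```swift
import Foundation

// Hypothetical stand-in for the audio layer: "plays" a clip in the background
// and invokes the callback when the sound finishes.
func startSound(_ name: String, onFinish: @escaping @Sendable () -> Void) {
    DispatchQueue.global().asyncAfter(deadline: .now() + 0.01, execute: onFinish)
}

final class LegacyPlayQueue: @unchecked Sendable {
    private let queue = DispatchQueue(label: "legacy.clip.queue") // serial, FIFO
    private let playing = DispatchSemaphore(value: 1)             // one sound at a time

    func enqueue(_ name: String, didFinish: @escaping @Sendable (String) -> Void) {
        queue.async {
            // Blocks the queue's thread until the previous sound has finished.
            self.playing.wait()
            startSound(name) {
                didFinish(name)        // inform the delegate (here: a closure)
                self.playing.signal()  // let the next queued task start
            }
        }
    }
}
```

Even in this stripped-down form the control flow bounces between a queue, a semaphore, and a callback, which is the part that resists a simple continuation wrapper.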

There’s quite a bit going on there (and this is in fact simplified; I’m leaving out some of the details), so converting it to async/await wasn’t as easy as just wrapping up my callbacks with continuations.

Attempt 1: Blended Approach

When you look through the available Swift documentation, especially the withCheckedContinuation functions, it looks like Apple wants you to take a gradual approach when modifying your code. The wrappers and so forth allow a developer to make the change in bits and pieces.

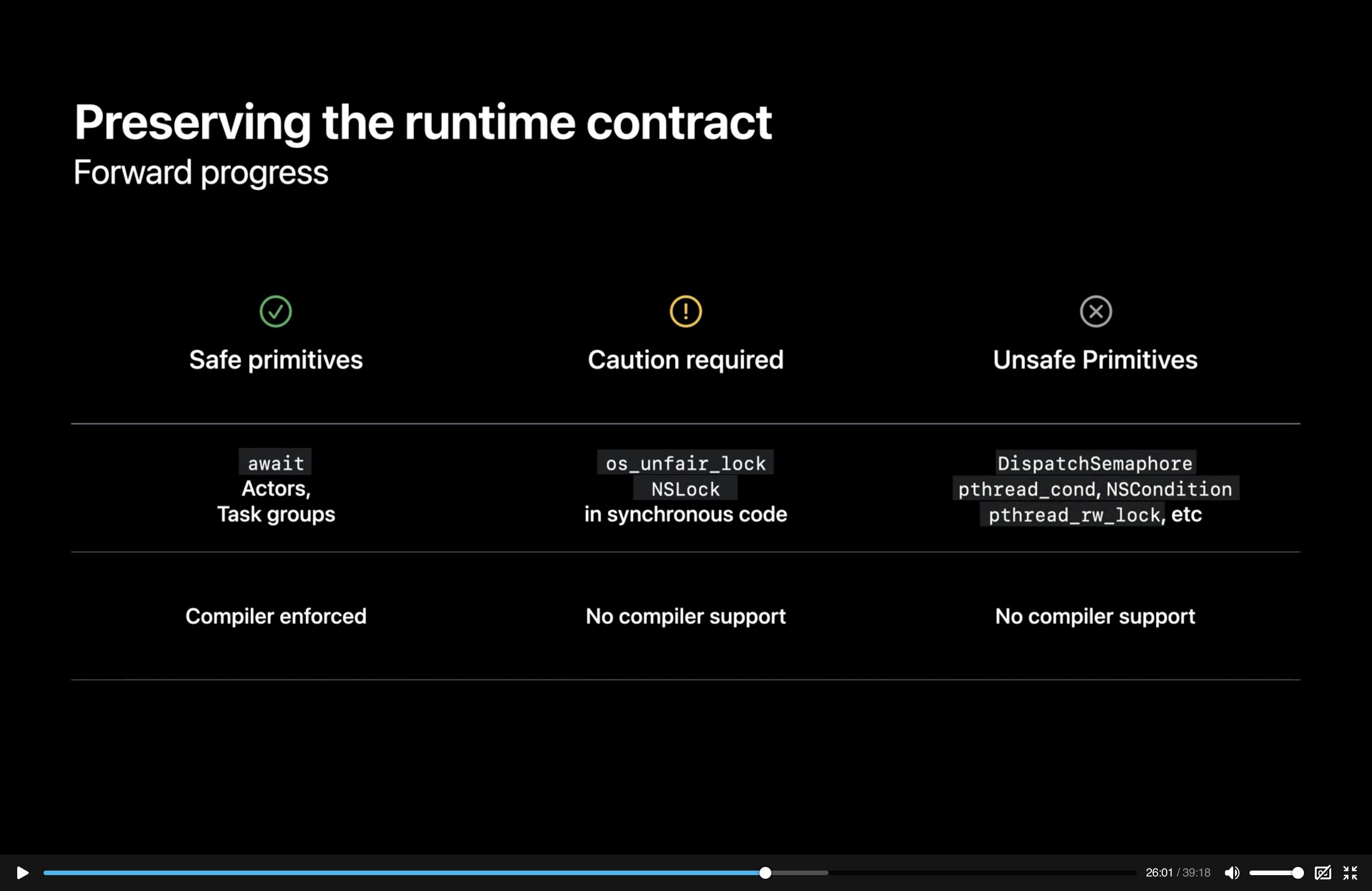

In particular, in one of the WWDC talks on concurrency, there is this slide:

Looking at that, I thought, “Okay, there’s some concurrency calls you can still use along with the new async/await, as long as you’re careful.” It gives the impression that you can migrate your code piece-by-piece.

Practically, though, it just doesn’t work that way. Trying to mix and match old and new concurrency models is an exercise in pain, and you’ll end up getting weird crashes that are impossible to debug. My advice is, if you’re moving old code to the new model, get rid of everything. Don’t try to use locks or any other previous threading API.

Attempt 2: Virtual Queue

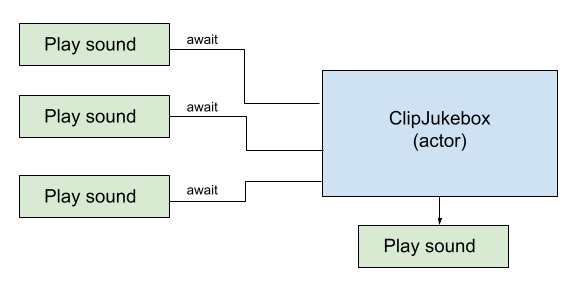

If locks and DispatchQueue are gone, how could I queue up sounds? It struck me that I could use actors to create a kind of virtual queue.

Actors enforce one-at-a-time access to themselves. If I made ClipJukebox an actor, and multiple threads each tried to play a sound at the same time, one of them would wait while the other played. I’m not sure how Swift internally implements actors, but I would think it would have some sort of list of pending calls, and process them one at a time. So instead of an explicit queue, I’d leverage the actor mechanics to create a sort of virtual queue.

The problem with this, of course, is that actors don’t guarantee the order in which they’ll accept new requests. With a serial DispatchQueue, you are guaranteed FIFO ordering, and that was important for my sound queue as well.

Actors are still useful to guarantee atomic access, but that’s all they can do for you.
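A small sketch of that distinction, using made-up names (PlayLog and recordConcurrently are not from Reiterate): every call is applied safely, but nothing promises the order in which calls from different tasks reach the actor.

```swift
// An actor protecting a plain array: each call to record(_:) runs with
// exclusive access to `names`, so no appends are lost or corrupted.
actor PlayLog {
    private var names: [String] = []

    func record(_ name: String) {
        names.append(name)
    }

    func snapshot() -> [String] {
        names
    }
}

// Sends every clip to the actor from its own child task.
func recordConcurrently(_ clips: [String]) async -> [String] {
    let log = PlayLog()
    await withTaskGroup(of: Void.self) { group in
        for clip in clips {
            group.addTask { await log.record(clip) }
        }
    }
    // Every clip is present exactly once, but the order is whatever
    // the scheduler happened to produce, not the order of `clips`.
    return await log.snapshot()
}
```

So the result always contains all of the clips, which is the atomic-access guarantee, but you should not rely on it matching the input order, which is what a play queue needs.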

Attempt 3: AsyncStream

This final attempt was what I should have started with. In my defense, I tried solutions in the order they were implemented, and AsyncStream is a relatively recent addition to Swift, added less than a year ago. But AsyncStream provides the best, most complete way to implement a FIFO queue using the new concurrency model. If you are transitioning code using DispatchQueue to the new concurrency model, you want to use AsyncStream.

With DispatchQueue, you create a DispatchWorkItem and pass it to the queue, which executes it after completing all pending tasks. This is a little different under AsyncStream. With AsyncStream you get a continuation when you create the stream, and you use that continuation to yield new items to the stream, which you can loop through in another Task via for await...in.

```swift
typealias BeforePlayingClosure = (() async -> Void)

struct StreamElement {
    let playable: ACPlayable
    let operation: BeforePlayingClosure?
}

// The continuation is our handle for pushing new elements onto the stream.
// (playableStream and streamTask are also stored properties; their declarations are not shown.)
var playableStreamContinuation: AsyncStream<StreamElement>.Continuation!

private func setupStream() {
    playableStream = AsyncStream<StreamElement> { continuation in
        playableStreamContinuation = continuation
    }
}

// Push an ACPlayable with optional beforeClosure onto our stream
func play(_ playable: ACPlayable, beforePlaying: BeforePlayingClosure? = nil) {
    playableStreamContinuation.yield(StreamElement(playable: playable, operation: beforePlaying))
}

func start() {
    guard streamTask == nil else { return }
    setupStream()
    streamTask = Task {
        // Elements arrive in the order they were yielded, one at a time.
        for await streamElement in playableStream {
            if let beforePlaying = streamElement.operation {
                await beforePlaying()
            }
            // A thrown error exits the loop and ends the task.
            try await ReiterateAudioEngine.play(streamElement.playable)
        }
    }
}
```

These are all methods and attributes inside the ClipPlayQueue actor. First, the queue is started by calling start(). This in turn calls setupStream(), which creates the AsyncStream and saves its continuation as an actor member. Then, we start a background Task that simply watches the AsyncStream using for await...in.

To play a clip, it’s passed into the play(_:beforePlaying:) method. All it has to do is wrap the given playable, and yield it to the stream. It will get played once it gets to the top of the queue.

One last detail is the BeforePlayingClosure. Reiterate needs to know when a clip is just about to be played. It needs this because it has to do some UI-related stuff, like displaying the clip’s title. I couldn’t figure out an easy way to execute code when an item made it to the top of the queue, so I’m just passing that as a closure. The clip and closure get wrapped into a StreamElement, which then gets unpacked so the stream task can execute the closure at the right time.
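If you want to see the FIFO behavior in isolation, here is a self-contained sketch with the Reiterate-specific pieces stripped out. The playInOrder function and the short sleep standing in for audio playback are assumptions for illustration; the AsyncStream calls are the same ones used above.

```swift
// Yields clip names onto an AsyncStream and consumes them in a separate Task.
// Returns the names in the order the consumer "played" them.
func playInOrder(_ clips: [String]) async -> [String] {
    var continuation: AsyncStream<String>.Continuation!
    let stream = AsyncStream<String> { continuation = $0 }

    let consumer = Task { () -> [String] in
        var played: [String] = []
        for await clip in stream {
            // Stand-in for playing the sound: the next element is not
            // taken from the stream until this await completes.
            try? await Task.sleep(nanoseconds: 1_000_000)
            played.append(clip)
        }
        return played
    }

    // By default the stream buffers elements without limit, so yielding
    // does not wait for the consumer to catch up.
    for clip in clips {
        continuation.yield(clip)
    }
    continuation.finish() // ends the for await loop once the buffer drains

    return await consumer.value
}
```

Calling `await playInOrder(["a", "b", "c"])` gives the clips back in the order they were yielded, which is the DispatchQueue-style guarantee that the actor-only approach could not provide.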

This is the final version of the new audio stack in Reiterate. The benefits of the new concurrency model are fairly clear. Before, I had a twisty implementation full of locks, semaphores, and callbacks. Now the code that plays clips is fairly linear with a single closure. Dealing with concurrency can be tricky, but I like these new tools.